The advances that have marked turning points for humanity have always had their scornful critics. Imagination and inventiveness seem to have found their counterpart in distrust and in the fear of glimpsing the unknown. It is the old dispute between those who dare to explore untrodden paths and those who are wary of new ways.

History offers many examples of these disputes that may seem absurd today but were the subject of bitter quarrels at the time. The invention of writing drew furious criticism from those who believed knowledge would perish because people would no longer need to memorize things. Many centuries later, the printing press made some people throw up their hands, convinced that the mass printing of books would diminish their value.

The Luddites, too, disapproved of the arrival of new machines. During the Industrial Revolution, they smashed and burned machinery, fearing it would take their jobs. More recently, radio and television also stirred controversy, with critics prophesying a future in which people would stop reading newspapers and movie theaters would stand empty.

Another critical juncture has been the rise of the Internet and its ubiquity in our lives, which has forced many business models to adapt to this new reality or resign themselves to obsolescence. Some predicted that the decline in sales of physical media caused by the Internet would destroy the music and film industries. Yet far from disappearing, music and audiovisual content are consumed more than ever before through streaming platforms, to say nothing of how these platforms finance and distribute productions we would otherwise never have seen.

At this digital juncture, we face a landscape marked by increasingly dramatic changes. Looking back over just one decade, the way we consume information and communicate has changed in ways we could never have imagined, and what is yet to come seems even more unpredictable.

Artificial Intelligence as the final frontier

One of the terms most often tossed around in technology lately is artificial intelligence (AI), which can be defined as the ability of a machine to imitate functions of the human mind. AI research and deployment are a priority at many companies: think of Uber with its autonomous cars, Google with its smart search engine or Apple with the iPhone's facial recognition.

Much of today's artificial intelligence is built on artificial neural networks: systems, loosely inspired by the brain, that learn to recognize patterns from enormous amounts of data. Put simply, this is giving rise to tools that could transform virtually every human activity and capability, to such an extent that the European Parliament has already debated whether to grant machines an “electronic personality”. In some cases the debate has gone even further, as with Sophia, the first robot in history to be granted citizenship (by Saudi Arabia, in 2017).

https://www.youtube.com/watch?v=zuFeCQl5VI4

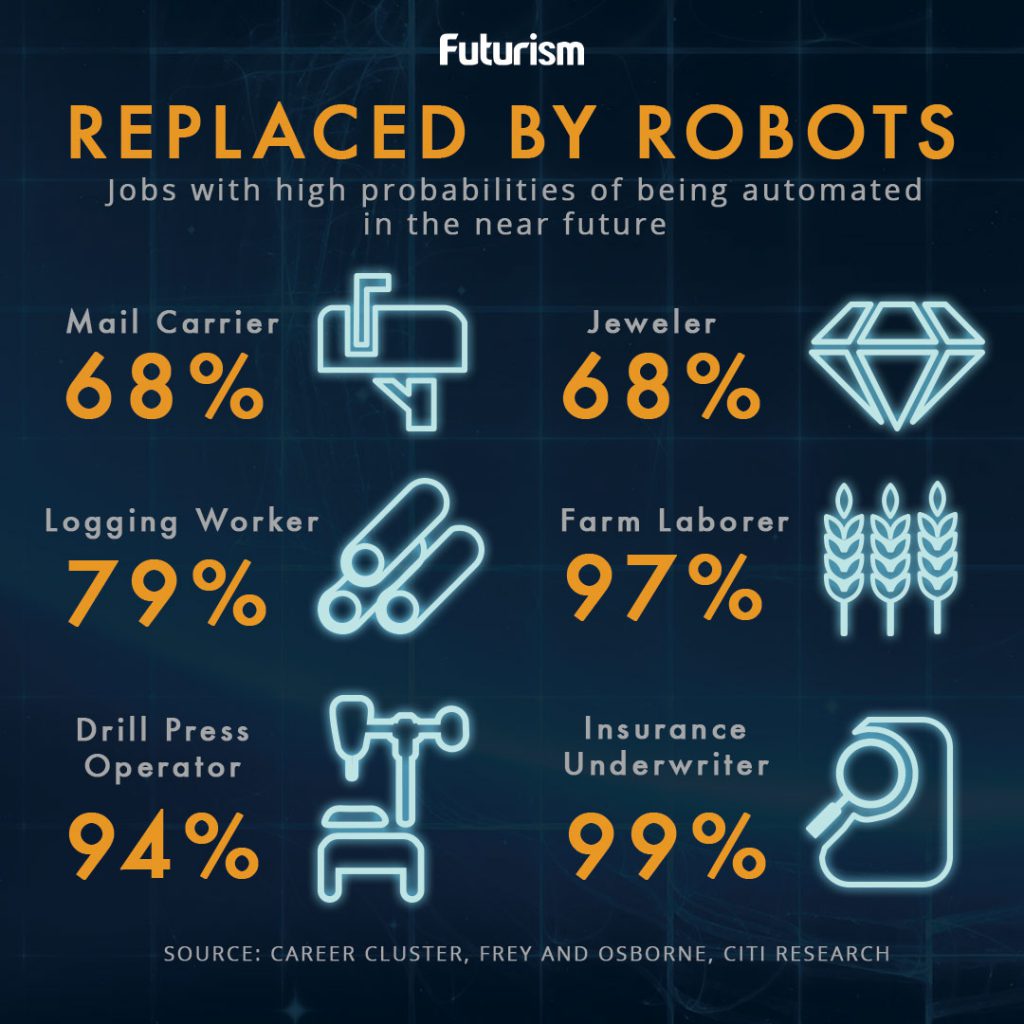

In the short term, machines will be able to carry out many of the jobs that humans do today, above all the most repetitive and predictable ones, such as assembly-line work, fast-food service and driving.

Further ahead, Ray Kurzweil, Google’s Director of Engineering, has predicted that machines will reach human-level intelligence around 2029, and that the so-called singularity, the point at which machine intelligence surpasses our own, will arrive around 2045.

Admittedly, a future in which machines take our jobs or outsmart us can give us pause and tempt us to become the new Luddites. Nor do science fiction films such as The Terminator and The Matrix do much to convince us that giving machines a consciousness of their own is a good idea.

However, solutions to these future problems are already being explored, such as proposals to make machines pay taxes like anyone else, and protocols to ensure that people are not placed in dangerous situations. In this regard, the work of Joichi “Joi” Ito is particularly interesting. A professor at the MIT Media Lab, he created a course on The Ethics and Governance of Artificial Intelligence, which approaches the problem from a technological, moral and social point of view.

Nobody can predict the future accurately, but it belongs to those who know how to adapt best to new scenarios. In a reality where machines can perform the most repetitive and mechanical jobs, curiosity seems set to become more essential than ever, especially since many education experts agree that succeeding in the post-singularity world will require people who are multidisciplinary, creative and flexible, with strong social skills. Qualities that are, strangely enough, very human.